A Guide to the Quality Assurance Testing Process

- Author: Gabriel (@gabriel__xyz)

The quality assurance testing process isn't just about finding bugs at the last minute. It's a systematic approach to weaving quality into every single stage of the software development lifecycle. Think of it as a continuous effort to prevent defects, not just find them, ensuring the final product nails user expectations, business goals, and technical specs.

Building Quality Into Your Software Blueprint

Imagine you're the architect of a massive skyscraper. Would you wait until the last brick is laid to check if the foundation is sound or if the wiring is up to code? Of course not. You’d validate every single component at each stage of construction.

That's exactly how the quality assurance testing process works. It provides a constant stream of validation, from the first napkin sketch of an idea all the way to the final release.

This proactive mindset completely shifts the focus from "finding defects" to "preventing them." It's a collaborative game where developers, product managers, and QA pros work side-by-side to build quality into the product from day one. When you get this right, a solid QA process becomes the true backbone of a high-performing development team.

Why a Proactive QA Process Matters

Trying to bolt on testing at the very end is a recipe for disaster. It almost always leads to delayed releases, blown budgets, and a brand reputation that takes a serious hit. On the other hand, a well-defined quality assurance process builds confidence and delivers some pretty tangible benefits.

- Boosts Stakeholder Confidence: When you consistently deliver quality software on a predictable schedule, stakeholders see that the project is under control and on its way to hitting its goals.

- Protects Brand Reputation: All it takes is one critical bug slipping into production to instantly shatter user trust. A robust QA process is your best defense against those kinds of public failures.

- Drives User Satisfaction: Let's be honest, users love software that just works. When a product is reliable, intuitive, and bug-free, you get happier users, better adoption rates, and glowing reviews.

- Increases Team Efficiency: When quality becomes a shared responsibility, developers start catching their own mistakes earlier. The whole team spends less time on frustrating rework and more time building cool new things.

Creating products that are both robust and reliable starts with understanding and implementing a few essential quality assurance procedures. To give you a clearer picture, let's break down the core components that form a modern QA strategy. These pillars work together to create a comprehensive and effective testing process.

Pillars of a Modern QA Strategy

| Pillar | Description | Primary Goal |
|---|---|---|
| Process Integration | Embedding QA activities directly into every phase of the development lifecycle, not just at the end. | To prevent defects from ever being introduced and foster a culture of shared quality. |
| Risk-Based Testing | Prioritizing testing efforts on areas of the application that pose the greatest risk to the business. | To maximize the impact of testing resources by focusing on what matters most. |
| Automation | Using tools to automate repetitive, time-consuming tests, like regression and performance testing. | To increase testing speed, improve accuracy, and free up human testers for complex tasks. |
| Continuous Feedback | Establishing tight feedback loops between QA, development, and product teams to quickly address issues. | To accelerate the bug-fix cycle and ensure everyone is aligned on quality standards. |

These pillars aren't just buzzwords; they represent a fundamental shift in how we think about building software. They move QA from a final gatekeeper to an integrated partner in creating excellent products.

Key Takeaway: Modern QA isn't a separate department that just says "no." It's an integrated practice that empowers the entire team to build better software, faster. It creates a culture where everyone owns quality.

Ultimately, the quality assurance testing process is more than just a list of checks and balances—it's a mindset. It’s about making sure that every feature, every line of code, and every user interaction adds up to a product that is not only functional but also dependable and even delightful to use. This foundation is what sets the stage for the specific lifecycle stages that turn this philosophy into practice.

The 7 Stages of the QA Testing Lifecycle

The quality assurance testing process isn't a single, monolithic event. It's a structured journey, more like a well-oiled assembly line for quality. Each station has a specific purpose, building on the work of the previous one to methodically and comprehensively validate the software.

To make this real, let's walk through the lifecycle with a practical example: testing a new one-click checkout feature for an e-commerce website. This feature has to be fast, secure, and absolutely bulletproof.

1. Requirement Analysis

Before anyone writes a single line of test code, the QA team has to wrap their heads around what they're actually testing. This initial stage is a deep dive into the requirements documents, user stories, and design specs. The goal isn't just to read them—it's to poke and prod them, looking for gaps in logic, ambiguities, and anything that isn't testable.

For our e-commerce checkout, the team would be asking tough questions. What payment methods are we supporting? How does the system react if a credit card is expired? What exact confirmation message should the user see? Nailing these details down upfront saves a ton of headaches and ensures the tests actually reflect what the business wants.

2. Test Planning

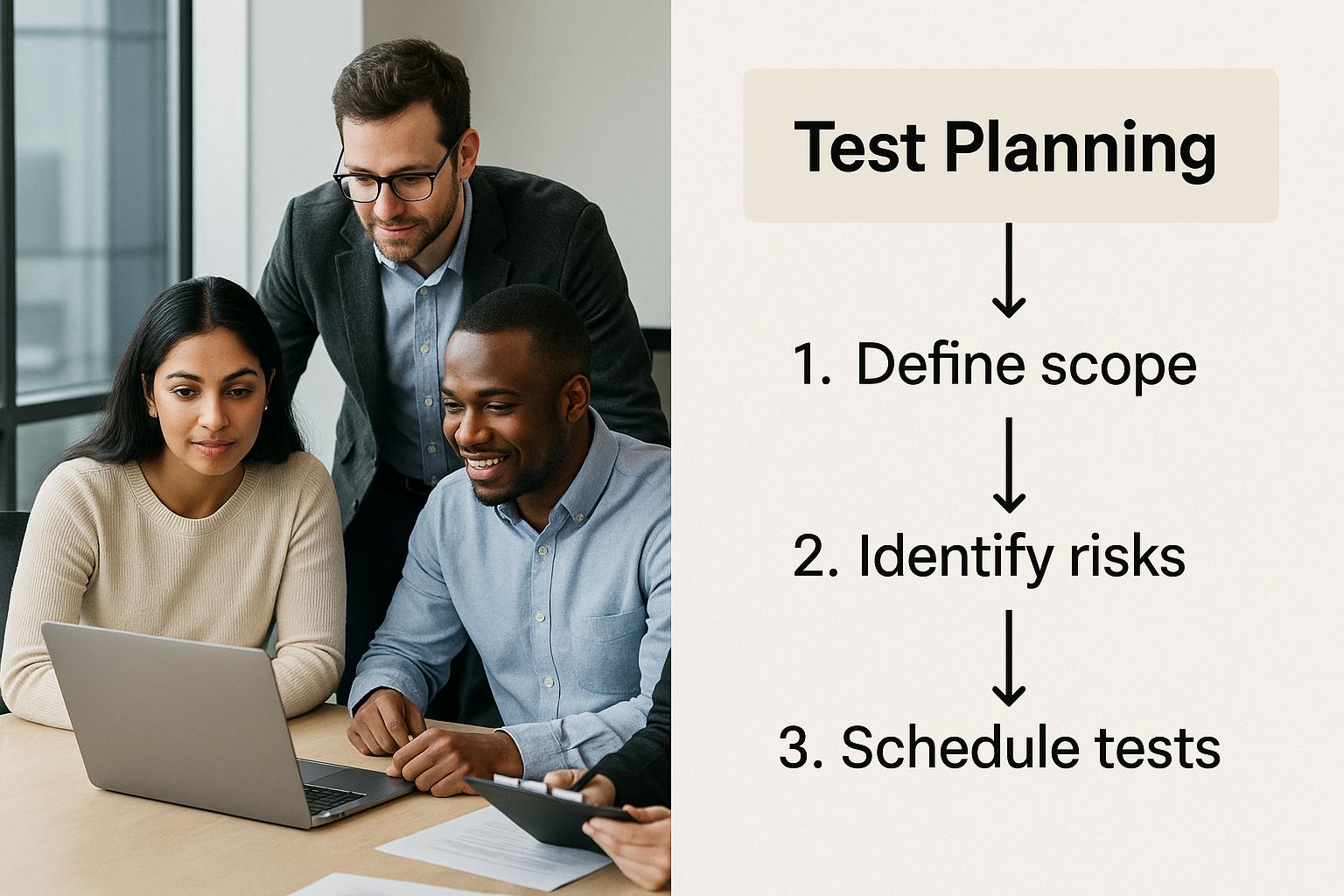

With a solid grasp of the requirements, it's time to build a strategic roadmap. The test plan is the formal document that lays out the entire testing effort. It’s the master blueprint for the whole operation, defining the scope, objectives, resources, schedule, and what "success" even looks like.

This plan gets into the nitty-gritty, detailing which types of testing are needed (functional, performance, security, etc.) and who is responsible for what. For our one-click checkout, the plan would specify testing across different browsers, define the expected server load, and set a timeline for when each phase needs to kick off and wrap up.

This image shows a team collaborating on the test planning stage—a critical step for defining the roadmap and getting everyone on the same page.

As the image shows, smart planning is the bedrock of a successful QA process, setting clear goals for the team. To see the bigger picture of where QA fits, understanding and mastering the software release lifecycle is a must.

3. Test Case Development

This is where the plan becomes reality. The QA team starts writing detailed, step-by-step test cases. Each one is a specific script designed to check a single piece of functionality or a requirement. Think of it as a recipe for a tester to follow.

A good test case includes a few key ingredients:

- Test Case ID: A unique identifier for tracking.

- Description: A quick summary of what's being tested.

- Prerequisites: What needs to be true before you even start.

- Test Steps: The exact actions the tester will take.

- Expected Results: What should happen if the software works correctly.

For our checkout feature, one test case might be "Verify successful purchase with a valid Visa card." It would include steps for logging in, adding an item, clicking the checkout button, and an expected result of seeing the "Thank You" page. Another test case would focus on a failure, like what happens when a user tries to apply an invalid coupon code.
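To make those ingredients concrete, here is a minimal sketch of how the two checkout test cases might be captured as structured records. The class name `ManualTestCase`, the `CHK-...` IDs, and the exact step wording are assumptions made up for illustration, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    """One scripted check, mirroring the ingredients listed above."""
    case_id: str
    description: str
    prerequisites: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)
    expected_result: str = ""


# Hypothetical IDs and wording, for illustration only.
valid_visa = ManualTestCase(
    case_id="CHK-001",
    description="Verify successful purchase with a valid Visa card",
    prerequisites=["User account exists", "A valid Visa card is saved"],
    steps=[
        "Log in",
        "Add an item to the cart",
        "Click the one-click checkout button",
    ],
    expected_result='The "Thank You" page is displayed',
)

invalid_coupon = ManualTestCase(
    case_id="CHK-002",
    description="Verify that an invalid coupon code is rejected",
    prerequisites=["User is logged in", "Cart contains one item"],
    steps=["Enter an invalid coupon code", "Click apply"],
    expected_result="An error message is shown and the total is unchanged",
)

test_library = [valid_visa, invalid_coupon]
```

Keeping test cases in a structured form like this makes it easier to track them by ID and map them back to requirements later.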

4. Test Environment Setup

You can't test software in a vacuum. The test environment is a dedicated server or platform that mimics the live production environment as closely as possible, complete with all the right hardware, software, and network configurations. Getting this setup right is absolutely critical.

A stable test environment is non-negotiable. If it's flaky or poorly configured, tests can fail because of infrastructure problems instead of actual software bugs. That leads to false alarms and wastes a ton of valuable time and resources.

For our e-commerce feature, this means spinning up a dedicated server with the application, a database filled with sample products and users, and connections to mock payment gateways.
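As a rough illustration of what a "mock payment gateway" can look like, the sketch below defines an in-memory stand-in that never touches the network. The `Card` and `MockPaymentGateway` names, the response strings, and the simplified expiry rule (a card is rejected once its expiry date has passed) are all assumptions; a real provider's sandbox will behave differently.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Card:
    number: str
    expiry: date


class MockPaymentGateway:
    """Stand-in for a real payment provider; no network calls are made."""

    def __init__(self, today: date):
        # The current date is injected so tests are repeatable.
        self.today = today
        self.charges: list[tuple[str, int]] = []

    def charge(self, card: Card, amount_cents: int) -> str:
        # Simplified rule (assumption): reject any card past its expiry date.
        if card.expiry < self.today:
            return "declined: card expired"
        # Record only the last four digits, as a real system would avoid
        # storing full card numbers.
        self.charges.append((card.number[-4:], amount_cents))
        return "approved"


gateway = MockPaymentGateway(today=date(2025, 1, 1))
expired = Card(number="4111111111111111", expiry=date(2024, 12, 31))
valid = Card(number="4111111111111111", expiry=date(2026, 6, 30))

expired_result = gateway.charge(expired, 2500)
valid_result = gateway.charge(valid, 2500)
```

Because the gateway is deterministic, a failing checkout test points at the application rather than at a flaky third-party dependency.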

5. Test Execution

With the stage set and the scripts written, it's showtime. Test execution is the phase where testers actually run the test cases they prepared. They follow each step meticulously, comparing what actually happens to what was supposed to happen, and document everything.

If a test passes, it gets marked as passed. If it fails, meaning the actual result was different from the expected one, the tester records the failure and gets ready for the next crucial stage.
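The core of execution is comparing actual results against expected ones and recording the outcome. Here is a minimal sketch of that loop; the two `checkout_...` functions are hypothetical stand-ins for the system under test, and the second one deliberately simulates a bug.

```python
from typing import Callable, NamedTuple


class Result(NamedTuple):
    case_id: str
    passed: bool
    actual: str
    expected: str


def execute(case_id: str, action: Callable[[], str], expected: str) -> Result:
    """Run one test action and compare its outcome to the expected result."""
    try:
        actual = action()
    except Exception as exc:  # a crash counts as a failure, not a skipped test
        actual = f"error: {exc}"
    return Result(case_id, actual == expected, actual, expected)


# Hypothetical stand-ins for the system under test.
def checkout_with_valid_card() -> str:
    return "Thank You"


def checkout_with_invalid_coupon() -> str:
    return "Coupon applied"  # simulated bug: the invalid coupon was accepted


results = [
    execute("CHK-001", checkout_with_valid_card, "Thank You"),
    execute("CHK-002", checkout_with_invalid_coupon, "Invalid coupon code"),
]

failed = [r.case_id for r in results if not r.passed]
```

Each failed `Result` already carries the actual and expected values, which is most of what a defect report needs.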

6. Defect Management

When a test fails, a defect (or bug, if you prefer) is born. The defect management process is the system for logging, tracking, and squashing these issues. A solid defect report includes a detailed description, steps to reproduce the bug, its severity level, and often a screenshot or two.

Once logged in a tool like Jira, the defect is assigned to a developer. The dev works their magic, fixes the bug, and sends the updated code back to the QA team. The team then re-tests to confirm the fix worked (confirmation testing) and to make sure it didn't break anything else (regression testing). This back-and-forth continues until the defect is officially closed.
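That back-and-forth is essentially a small state machine. The sketch below models one possible version of it; the status names and allowed transitions are assumptions for illustration and do not reflect Jira's built-in workflows, which are configurable per project.

```python
from enum import Enum


class Status(Enum):
    NEW = "new"
    ASSIGNED = "assigned"
    FIXED = "fixed"
    RETEST = "retest"
    REOPENED = "reopened"
    CLOSED = "closed"


# Assumed workflow: which statuses a defect may move to from each status.
ALLOWED = {
    Status.NEW: {Status.ASSIGNED},
    Status.ASSIGNED: {Status.FIXED},
    Status.FIXED: {Status.RETEST},
    Status.RETEST: {Status.CLOSED, Status.REOPENED},
    Status.REOPENED: {Status.ASSIGNED},
    Status.CLOSED: set(),
}


def advance(current: Status, target: Status) -> Status:
    """Return the new status, or raise if the transition is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


# A defect whose first fix fails the re-test and goes around the loop again.
status = Status.NEW
for step in (Status.ASSIGNED, Status.FIXED, Status.RETEST, Status.REOPENED,
             Status.ASSIGNED, Status.FIXED, Status.RETEST, Status.CLOSED):
    status = advance(status, step)
```

Making the transitions explicit prevents shortcuts such as closing a defect that was never re-tested.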

7. Test Cycle Closure

Finally, once all the tests are run and the most critical defects are resolved, the lifecycle wraps up with test cycle closure. This isn't just about stopping; it's about reflecting and reporting back. The QA team puts together a summary report that typically includes:

- Total tests executed

- Number of tests that passed and failed

- Defect density and a breakdown of defects by severity

- An overall assessment of the software's quality

This report is what helps stakeholders make the go/no-go decision on whether the software is ready for release. It also provides invaluable feedback that can be used to make the next testing cycle even better.
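The numbers in that summary report are simple to compute once results are recorded. Here is a small sketch; it assumes defect density is measured as defects per thousand lines of code (KLOC), which is one common convention but not the only one, and the sample figures are invented.

```python
def summarize(passed: int, failed: int,
              defects_by_severity: dict[str, int],
              size_kloc: float) -> dict:
    """Build the headline numbers for a test cycle closure report."""
    if size_kloc <= 0:
        raise ValueError("size_kloc must be positive")
    executed = passed + failed
    total_defects = sum(defects_by_severity.values())
    return {
        "executed": executed,
        "passed": passed,
        "failed": failed,
        "pass_rate_pct": round(100 * passed / executed, 1) if executed else 0.0,
        # Assumption: density expressed as defects per KLOC.
        "defect_density_per_kloc": round(total_defects / size_kloc, 2),
        "defects_by_severity": dict(defects_by_severity),
    }


# Invented sample figures for the checkout feature.
report = summarize(
    passed=188,
    failed=12,
    defects_by_severity={"critical": 1, "major": 4, "minor": 7},
    size_kloc=8.0,
)
```

The overall quality assessment still needs human judgment; these figures only give it a factual starting point.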

How Cloud Environments and Autonomous Data Transform Testing

The old way of doing quality assurance testing was often bogged down by two massive headaches: setting up complicated test environments and getting your hands on realistic, safe test data. These hurdles didn't just slow down development; they severely limited how much you could actually test. But today, two major shifts are completely changing the game, making modern QA faster, more scalable, and a whole lot more secure.

Think about what it used to take to test an application across dozens of different operating systems, browsers, and mobile devices. You needed a physical lab filled with expensive, high-maintenance hardware. It was a huge bottleneck. Cloud-based testing environments have completely wiped that problem off the map.

Now, QA teams can spin up virtual environments on demand. Need to check for a bug on an old version of Firefox running on a specific Windows build? It’s a matter of a few clicks, not hours or days of painstaking setup. This kind of agility is essential for any team that takes continuous integration and delivery seriously.

The Power of Cloud-Based Testing

Moving your testing infrastructure to the cloud isn't just a small tweak—it delivers some serious advantages that directly boost the efficiency of your entire quality assurance process.

- Unmatched Scalability: You can run thousands of tests in parallel across a very wide range of configurations. This can shrink the time it takes to get feedback from days to minutes.

- Cost-Effectiveness: Forget about the steep costs of buying, storing, and maintaining all that physical hardware. With the cloud, you only pay for the resources you actually use.

- Centralized Management: All your test results and reports are pulled into one single dashboard. This gives your team a clear, unified view of product quality across every platform you support.

- Seamless CI/CD Integration: Cloud testing platforms are designed from the ground up to plug right into CI/CD pipelines, making automated testing a smooth, hands-off part of every single build.
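The scalability point comes down to fanning the same suite out across many configurations at once. The sketch below shows the shape of that idea with Python's standard thread pool. The `run_suite` function is a placeholder that simulates a result locally; a real setup would call your cloud testing provider there, and the browser and platform lists are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "safari"]
PLATFORMS = ["windows", "macos"]


def run_suite(config: tuple[str, str]) -> tuple[str, str, bool]:
    """Placeholder: pretend to run the suite on one browser/platform pair."""
    browser, platform = config
    # Simulated outcome: one hypothetical configuration fails.
    passed = not (browser == "safari" and platform == "windows")
    return browser, platform, passed


configs = list(product(BROWSERS, PLATFORMS))

# Run every configuration concurrently; map() keeps results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_suite, configs))

failing = [(b, p) for b, p, ok in results if not ok]
```

With a real provider, total wall-clock time approaches the duration of the slowest single configuration rather than the sum of all of them.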

This shift has made comprehensive, large-scale testing a reality for everyone, from scrappy startups to massive enterprises.

Solving the Test Data Dilemma

Of course, even the most perfect test environment is useless without good data. Using real production data is a non-starter—it’s a massive security and privacy risk, especially if you work in regulated industries like finance or healthcare. On the other hand, creating data manually is painfully slow and rarely covers all the tricky edge cases.

This is where autonomous test data generation steps in. This technology uses AI and sophisticated models to create huge volumes of realistic, privacy-compliant synthetic data whenever you need it.

This isn't just about generating fake names and addresses. The technology models complex data relationships, mimics real-world usage patterns, and aims to produce data that is statistically similar to what your app will face in production.

This is a monumental leap forward for QA. Teams are no longer stuck using sanitized, outdated copies of production data. Instead, they can generate fresh, relevant, and completely secure data sets tailored for any scenario, whether it's a performance test or a full regression suite. By automating data creation, you remove one of the biggest dependencies that has traditionally slowed QA to a crawl.
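To show the basic idea of generated test data, here is a deliberately simple sketch. It is a seeded, rule-based generator, not the AI-driven approach described above, and the field names and price list are assumptions. It does illustrate two properties that matter: no production data is involved, and relationships between fields (here, the order total) stay consistent.

```python
import random


def make_orders(count: int, seed: int = 42) -> list[dict]:
    """Generate synthetic orders with internally consistent fields."""
    rng = random.Random(seed)  # seeded so every run produces the same data
    orders = []
    for i in range(count):
        quantity = rng.randint(1, 5)
        unit_price_cents = rng.choice([499, 1999, 4999])
        orders.append({
            "order_id": f"ORD-{i:05d}",
            # Reserved .test domain, so no real inbox is ever targeted.
            "customer_email": f"user{rng.randint(1, 9999)}@example.test",
            "quantity": quantity,
            "unit_price_cents": unit_price_cents,
            # Derived field: must always agree with quantity and unit price.
            "total_cents": quantity * unit_price_cents,
        })
    return orders


orders = make_orders(100)
```

Seeding the generator keeps failures reproducible: a test that breaks on a particular data set will break on it again the next run.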

The combination of cloud environments and autonomous data is a defining trend in quality assurance. The cloud provides the agility to test on any device without physical hardware, while autonomous data solves the critical need for realistic, compliant test data. As you can see from recent insights on quality assurance trends from Qualityze, this is especially vital for regulated industries where data security is non-negotiable.

By embracing these technologies, organizations aren't just improving one small part of their workflow—they're fundamentally transforming the entire quality assurance testing process. They're breaking down old barriers, empowering teams to test more thoroughly, release faster, and build higher-quality software with more confidence than ever before.

Integrating Automation into Your QA Strategy

A common myth floating around development circles is that automation is gunning for the QA tester's job. The reality? It’s far more strategic. Automation doesn't replace skilled testers—it empowers them. Think of it as a tireless assistant that handles the tedious, repetitive work, freeing up human experts to focus on what they do best.

Imagine your QA team is a group of expert chefs. Manual testing is like tasting the soup for flavor balance and nuance, a task that demands human intuition and experience. Automation is like having a high-tech food processor that can chop a mountain of vegetables in minutes. It's a job that would otherwise exhaust the chefs and waste their valuable time.

The secret to a successful quality assurance process is knowing which tasks to hand off to the processor and which ones demand the chef's palate. A smart automation strategy is all about amplifying human intelligence, not replacing it.

Identifying Prime Candidates for Automation

Not all tests are created equal, and trying to automate everything is a common—and costly—rookie mistake. You want to automate the tasks that are repetitive, predictable, and time-consuming. These are the tests that deliver a high return on investment because they can run quickly and consistently, day or night, without anyone having to lift a finger.

Excellent choices for automation include:

- Regression Testing: This is the big one. These tests ensure that new code changes haven't accidentally broken existing features. Manually running hundreds, or even thousands, of regression tests before every single release is wildly impractical. It’s the perfect job for automation.

- Load and Performance Testing: Trying to simulate thousands of users hitting your application all at once is something a human team just can't do. Automated scripts, however, can generate this heavy load with ease, helping you find performance bottlenecks and breaking points before your customers do.

- Data-Driven Testing: Need to run the same test with hundreds of different data inputs? Think of testing a login form with every possible combination of valid and invalid credentials. Automation is far more efficient and less prone to human error for this kind of work.
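Data-driven testing is the easiest of these to picture in code: one piece of test logic, many rows of input. In this minimal sketch, `is_valid_login` and its rule (non-empty username, password of at least 8 characters) are hypothetical stand-ins for the real login check.

```python
def is_valid_login(username: str, password: str) -> bool:
    """Hypothetical rule: non-empty username, password of 8+ characters."""
    return bool(username.strip()) and len(password) >= 8


# Each row: (username, password, expected outcome).
CASES = [
    ("alice", "correct-horse", True),
    ("alice", "short", False),
    ("", "correct-horse", False),
    ("   ", "correct-horse", False),
    ("bob", "12345678", True),
]

# Run the same check against every row and collect any mismatches.
failures = [
    (username, password)
    for username, password, expected in CASES
    if is_valid_login(username, password) != expected
]
```

Adding a new scenario means adding one row to `CASES`, not writing a new test. Test frameworks offer the same pattern natively, for example `pytest.mark.parametrize` in pytest.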

This strategic approach to automation is already changing how teams operate. In fact, a recent study highlighted that 46% of testing teams report automation has replaced 50% or more of their manual testing efforts. This frees up a massive amount of time, letting organizations tackle more complex testing challenges. You can find more insights like this in these test automation statistics on Testlio.com.

The Irreplaceable Value of Manual Testing

While automation is a beast at handling repetitive tasks, certain types of testing absolutely require a human touch. These are the areas where judgment, creativity, and empathy are everything. A machine can follow a script to the letter, but it can't tell you if an application feels intuitive or if a workflow is just plain confusing for a new user.

Here are the critical areas where manual testing reigns supreme:

- Exploratory Testing: This is an unscripted, creative free-for-all where testers poke and prod the application to find bugs that a predefined test case would almost certainly miss. It relies entirely on the tester's curiosity, experience, and knack for breaking things.

- Usability Testing: Evaluating the user experience (UX) means watching how real people interact with the software. An automated script can't give you feedback on a confusing layout, awkward navigation, or whether the design is visually appealing. That requires a person.

- Ad-Hoc Testing: This is all about spontaneous, informal testing without any specific plan. The goal is to try and break the system with random, unexpected actions—something humans are uniquely good at.

A mature quality assurance process embraces this balanced approach. It combines the sheer speed and scale of automation with the critical thinking and insight of manual testers. For teams already using GitHub and Slack, you can learn more about how to enhance your code quality checks with GitHub Actions and Slack to support this hybrid model even further.

By integrating these practices, teams create a powerful, multi-layered defense against bugs and deliver a much better product.

The Growing Impact of AI in Quality Assurance

The world of quality assurance is in the middle of a massive shake-up, all thanks to Artificial Intelligence (AI) and Machine Learning (ML). These aren't just buzzwords or some far-off concepts anymore. They are actively reshaping the testing landscape right now, pushing QA from a reactive, manual chore into a predictive and intelligent discipline.

Here’s a good way to think about it: traditional automation is like a factory robot that's been programmed to do one specific task over and over. It's incredibly efficient, but if the product on the assembly line changes even a tiny bit, the robot grinds to a halt until a human steps in to reprogram it. AI in QA is like giving that robot a brain and eyes, letting it see and adapt to changes all on its own.

This new intelligence is contributing to real growth in the QA industry. The global QA testing market is expected to grow at a compound annual rate of about 7% through 2032, a surge driven in large part by the adoption of AI and ML, which are making the entire quality assurance testing process smarter, faster, and way more in tune with modern development speeds. You can check out more top QA trends at OnPath Testing.

The Rise of Intelligent and Self-Healing Tests

One of the most practical ways AI is making an impact is in test maintenance—a notoriously painful and time-consuming part of QA. Every developer knows the drill: someone changes a button's ID or moves an element on a page, and suddenly a bunch of automated test scripts break. A QA engineer then has to manually dig through the code, find the change, and update the script.

Self-healing tests are changing that game completely. By using AI, the testing tool can recognize that an element has changed but is still functionally the same thing. It then intelligently updates the test script on its own, saving countless hours previously wasted on tedious maintenance.
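Commercial self-healing tools typically rely on learned similarity models, which are beyond a short example. The sketch below swaps in a much simpler technique, an ordered list of fallback locators, to show the general shape of the idea. The page is modeled as a plain list of dictionaries, and all element names are made up.

```python
from typing import Optional

Element = dict[str, str]
Locator = tuple[str, str]  # (attribute name, expected value)


def locate(elements: list[Element],
           locators: list[Locator]) -> tuple[Optional[Element], Optional[Locator]]:
    """Try each locator in order; return the first match and the locator used."""
    for attr, value in locators:
        for element in elements:
            if element.get(attr) == value:
                return element, (attr, value)
    return None, None


# The button's id was renamed from "buy-now" to "buy-now-v2" by a developer.
page = [
    {"id": "buy-now-v2", "text": "Buy now", "role": "button"},
    {"id": "cart", "text": "View cart", "role": "link"},
]

# Primary locator first, then a fallback based on the visible text.
locators = [("id", "buy-now"), ("text", "Buy now")]

element, used = locate(page, locators)
# The test "healed" if it had to fall back past the primary locator.
healed = used is not None and used != locators[0]
```

A tool built this way would log the healed locator so a human can confirm the match and update the script, rather than silently accepting it.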

AI-driven testing isn’t about replacing testers; it’s about augmenting their abilities. It handles the repetitive, brittle aspects of test maintenance, allowing humans to focus on complex, creative problem-solving and exploratory testing.

This shift directly helps development velocity. Instead of constantly pausing to fix broken tests, teams can keep their momentum going, knowing their test suite can adapt on the fly. Of course, balancing this new speed with robust quality is a new challenge. If you're curious, we have a guide on the key trade-offs between code quality and delivery speed.

From QA to Quality Engineering

AI is also revolutionizing how we prioritize testing. Machine Learning models can sift through years of development data—code commits, bug reports, test results—and predict which parts of an application are most likely to have new bugs. This lets teams aim their limited testing resources exactly where they’ll have the biggest impact.
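A trained model is out of scope for a short example, but the prioritization idea can be sketched with a hand-weighted heuristic over the same kinds of signals. The weights, module names, and history figures below are all invented for illustration; a real ML approach would learn the weighting from data instead.

```python
def risk_score(commits: int, past_bugs: int, test_failures: int) -> float:
    """Hand-picked weights (assumption), not a trained model."""
    return 0.2 * commits + 0.5 * past_bugs + 0.3 * test_failures


# Invented history per module: recent commits, past bugs, recent test failures.
history = {
    "search": {"commits": 25, "past_bugs": 3, "test_failures": 1},
    "checkout": {"commits": 40, "past_bugs": 12, "test_failures": 6},
    "profile": {"commits": 5, "past_bugs": 1, "test_failures": 0},
}

# Highest-risk modules first: these get tested earliest and most deeply.
ranked = sorted(history, key=lambda name: risk_score(**history[name]), reverse=True)
```

Even a crude ranking like this gives the team a defensible answer to "where should we test first?" and a baseline to compare a learned model against.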

This evolution is pushing the role of QA professionals toward Quality Engineering. It’s a more proactive, data-driven role that’s all about building quality into the development process from the very start, not just checking for it at the end.

Tomorrow’s QA leaders will need a new set of skills:

- Data Analysis: To make sense of ML predictions and spot risk patterns.

- AI Tool Management: To configure and maintain intelligent testing platforms.

- Process Optimization: To weave AI-driven insights into the larger development lifecycle.

Ultimately, AI is turning the quality assurance testing process into a core strategic function. It gives teams the power to make smarter decisions, automate with intelligence, and build better software with more confidence and speed.

Essential Metrics for Measuring QA Success

So, how do you really know if your quality assurance process is working? Moving past a simple "it feels better" gut check means you need hard data. To prove the value of your QA efforts and—more importantly—to keep getting better, you have to track the right key performance indicators (KPIs).

Think of these metrics like the dashboard in your car. It doesn't just tell you if you're moving; it gives you the vital signs—speed, engine health, fuel level—that let you make smart decisions on the fly. A good QA dashboard does the same thing by telling a clear, data-backed story of your team's impact and efficiency.

Test Effectiveness Metrics

This first group of metrics gets right to the point: how good are we at finding bugs before they get to customers? They directly measure the quality and real-world impact of your testing.

- Defect Detection Percentage (DDP): This is the ultimate report card for your test suite. It is the share of all known bugs that your QA team caught before a release: the number found before release divided by the total number found both internally and by users after release. A high DDP is a great sign.

- Defect Removal Efficiency (DRE): This is similar to DDP but focuses on how well the team fixes the problems it finds. It’s calculated by dividing the number of defects fixed before release by the total number of defects found.

When you see consistently high percentages for both DDP and DRE, you're looking at a mature and effective quality assurance process.
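Both metrics are simple ratios. The sketch below follows the definitions given above; exact formulas vary between teams, so treat the function signatures and sample numbers as assumptions.

```python
def defect_detection_percentage(found_before_release: int,
                                found_after_release: int) -> float:
    """Share of all known defects that QA caught before release."""
    total = found_before_release + found_after_release
    return 100.0 * found_before_release / total if total else 0.0


def defect_removal_efficiency(fixed_before_release: int,
                              total_found: int) -> float:
    """Share of all found defects that were fixed before release."""
    return 100.0 * fixed_before_release / total_found if total_found else 0.0


# Invented sample: QA found 95 defects, users later reported 5 more,
# and 90 of the 100 known defects were fixed before release.
ddp = defect_detection_percentage(found_before_release=95, found_after_release=5)
dre = defect_removal_efficiency(fixed_before_release=90, total_found=100)
```

Tracking both per release, rather than as a single lifetime figure, is what reveals whether the process is improving.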

Test Efficiency and Process Health

Finding bugs is one thing, but finding them efficiently is another. These metrics look at the speed, cost, and overall health of your testing operations. They make sure your team isn't just effective, but also productive.

Metrics provide the objective language needed to communicate QA's value. A dashboard showing a 95% Defect Detection Percentage and a 50% reduction in Test Execution Time is far more compelling than simply saying, "We're doing a good job."

Here are a few key indicators of a healthy and efficient process:

- Average Test Execution Time: How long does it take to run your test suites? Tracking this helps you spot performance drags and really highlights the ROI of automation.

- Requirements Coverage: This metric answers the crucial question, "Are we testing everything we're supposed to?" It maps your test cases back to the initial business requirements, ensuring no feature gets left behind.

- Defect Age and Severity: How long do critical bugs just sit in the backlog? Tracking the age of defects, especially the high-severity ones, shines a light on bottlenecks in your development and resolution workflow.
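Requirements coverage and defect age are both easy to compute once test cases are linked to requirement IDs and defects carry an open date. A minimal sketch, with made-up `REQ-...` and `CHK-...` identifiers:

```python
from datetime import date


def requirements_coverage(requirements: set[str],
                          case_to_reqs: dict[str, set[str]]) -> tuple[float, set[str]]:
    """Return the covered percentage and the set of untested requirements."""
    covered = set().union(*case_to_reqs.values()) & requirements
    uncovered = requirements - covered
    pct = 100.0 * len(covered) / len(requirements) if requirements else 0.0
    return pct, uncovered


def defect_age_days(opened: date, today: date) -> int:
    """Number of days a defect has been sitting open."""
    return (today - opened).days


requirements = {"REQ-1", "REQ-2", "REQ-3", "REQ-4"}
case_to_reqs = {
    "CHK-001": {"REQ-1", "REQ-2"},
    "CHK-002": {"REQ-2", "REQ-3"},
}

coverage_pct, untested = requirements_coverage(requirements, case_to_reqs)
age = defect_age_days(opened=date(2025, 3, 1), today=date(2025, 3, 31))
```

The list of untested requirements is usually more actionable than the percentage itself, because it tells the team exactly which test cases to write next.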

Building a solid set of metrics is a lot like creating a detailed checklist for code reviews; it systematizes quality and makes sure nothing critical gets missed. For a great example of this in action, check out this code review checklist to see how structured processes drive better outcomes.

By combining metrics for effectiveness, efficiency, and overall process health, you get a complete, 360-degree view of your QA success.

Got a question about QA? You're not alone. When teams start getting serious about quality, a few common questions always seem to pop up. Let's tackle some of the big ones.

What’s the Right Tester-to-Developer Ratio?

Ah, the classic question. Everyone wants a magic number, but the truth is, there isn't one. The "right" ratio really boils down to your specific situation—things like how complex your product is, how experienced your dev team is, and how mature your test automation is.

A common starting point you’ll hear is around 1 QA engineer for every 5 to 10 developers. But treat that as a loose guideline, not a rule.

If you're building something incredibly complex or your team is just getting its feet wet with formal testing, you might need a tighter ratio, maybe closer to 1:3. On the flip side, if you have a rock-solid automation suite and a culture where developers own a lot of the testing, you can run much leaner. The real key is to start somewhere and then adjust based on what your defect metrics and release speed are telling you.

Should We Just Automate Everything?

In a word: no. This is one of the most common traps teams fall into. While automation is a huge lever for efficiency, trying to automate 100% of your tests is a recipe for wasted time and money. The goal should always be strategic automation, not total automation.

Focus your automation firepower on the repetitive, high-impact stuff. Think regression suites, performance checks, and other tests that run the same way every single time.

Manual testing is still king in areas that demand human creativity and intuition. Things like exploratory testing, usability checks, and just general ad-hoc bug hunting are where a skilled QA pro provides value that no script ever will.

The sign of a truly mature QA process is a smart balance, using both automation and manual expertise where they shine brightest.

We Have No QA Process. Where Do We Even Start?

If you're starting from absolute zero, the key is to build momentum with small, manageable steps. Don't try to roll out a massive, complicated framework all at once. You'll just overwhelm everyone.

Here’s a simple, three-step starting point:

- Start with Bug Tracking: Get a simple system in place to log every single bug you find. It doesn't have to be fancy—a Jira or Trello board is perfect. Just get it all written down.

- Introduce Basic Test Cases: For every new feature, start writing down simple, clear steps to test it. This is the seed of your future test library.

- Hold Bug Triage Meetings: Get your developers and QA folks in a room (virtual or otherwise) to review new bugs, decide what's important, and assign them out.

These first steps are all about building the core habits of communication and documentation. Get these right, and you'll have a solid foundation for any quality assurance testing process you build on top.
